Making scientific code run faster

This post by Jon Hill, Imperial College London, first appeared on EPCC's blog.

I always jump at the chance to work with EPCC because the people there have a wealth of experience of making scientific code go even faster. Whilst performance is extremely important to our research, we don't have the time both to do the science and to improve the code's performance.

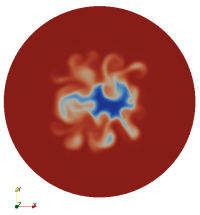

The Applied Modelling and Computation Group (AMCG), part of the Earth Sciences and Engineering department at Imperial College London, carries out research in a number of scientific fields, from ocean modelling through computational fluid dynamics to modelling nuclear reactors. All of this research uses one of three codes: Fluidity, Radiant or FEMDEM.

I work on Fluidity, the finite element framework we use for computational fluid dynamics, ocean modelling, tsunami modelling, and simulating landslides and sedimentary systems.

We at AMCG have worked with EPCC on a number of projects, most recently through its APOS-EU project and a HECToR dCSE (distributed Computational Science and Engineering) project. Both projects focused on making Fluidity run even faster and scale further on current cutting-edge hardware.

The idea was to restructure our memory accesses so that they perform well on NUMA (Non-Uniform Memory Access) machines. On these machines, each processor has its own local memory and reaching memory attached to another processor is slower, so data should be placed close to the core that uses it. If data sits in memory attached to a neighbouring processor, you take a performance hit every time it is fetched. We had ideas on how to do this, but we needed experienced software engineers to implement and test them. This is where EPCC comes in: not only can they provide the software engineers, but they can also help improve our initial ideas. The APOS-EU project also looked ahead to how we will write code for future architectures (hint: you don't; you let the computer do it for you).

Performance increase

Both projects went very well and achieved what they set out to do. The only disappointment was that every time we removed one performance bottleneck, another appeared, which limited the final improvement, but there was still an improvement in the end. As a result of this work, we can now run on more than 32,000 cores on HECToR, and we have reduced runtime by up to 20%. We also understand our performance bottlenecks better now.

It was a pleasure to collaborate with my former colleagues and friends. Fluidity is that bit faster, smarter and better now. That saves me time every time I run a simulation, which in turn means I can get more science done.